Or, how to use Signed HTTP Exchanges (SXG) for good by building a trustworthy software distribution method for the web.

Intro. In-Browser Crypto: It’s Everywhere.

In this post we will look at web applications deploying cryptography to protect users in the event of the service operator going rogue (or being hacked). Prominent among such applications are those offering end-to-end encryption (E2EE) for file storage, calls or messaging, wherein a malicious service provider cannot see the contents of the users’ communication because it is encrypted before being sent to the service. All such applications must use cryptography executed on the client side, which in the case of web applications means JavaScript or WebAssembly served by a webserver and executed in the user’s browser upon visiting the site hosting the application. Here is just a small selection of a diverse set of applications using this type of in-browser cryptography:

- Keybase for encrypted chat/signing attestations in browser.

- Proton Mail for decrypting PGP-encrypted mail in the webclient.

- Tutanota for decrypting mail/calendar contents in the webclient.

- Jitsi Meet for E2EE (end-to-end encrypted) audio/video calls.

- WhatsApp Web for E2EE chat.

- Wire (In Browser) for E2EE chat.

- The Ethereum KZG Ceremony for generating a trust setup.

- CryptPad for collaborative encrypted text documents.

- WebWormhole for easy file transfer based on PAKE.

- Proton Drive for encrypted file storage/sharing.

- MEGA for encrypted file storage/sharing (with mixed results).

- Bitwarden for accessing the encrypted password store in-browser.

- And many, many more…

The motivations for providing these applications in the browser are the same as any other web application:

- Convenient: just go to https://my-app.tld and the application is good to go.

- Easy To Update: when the cache expires users automatically get the new version.

- Cross-Platform: Linux? Windows? iOS? Android? All you need is a browser.

- Sandboxed: the app runs separated from other applications.

What’s The Problem?

The fundamental security problem with deploying cryptographic applications in the browser is that the creators of the application may deploy a new version, at any time, undetected and may even target only selected users.

The first step of any in-browser protocol is the server sending the code for the client to execute. Therefore, by simply serving a targeted user a malicious version of the application, any and all guarantees ensured by any possible cryptography can be trivially bypassed, e.g. by uploading the key material to the service operator. As a result, the best one can usually hope for is “passive security”, i.e. security against an adversary which may snoop, but does not deviate from the protocol. This is the core problem with deploying cryptographic applications in-browser:

“Where installation of native code is increasingly restrained through the use of cryptographic signatures and software update systems which check multiple digital signatures to prevent compromise (not to mention the browser extension ecosystems which provide similar features), the web itself just grabs and implicitly trusts whatever files it happens to find on a given server at a given time.”

– Tony Arcieri (What’s wrong with in-browser cryptography)

“With regards to the ProtonMail web application, features such as Subresource Integrity (SRI) could arguably provide some increased authenticity to the code delivery mechanism. However, these features are deemed insufficient for ProtonMail to meet its security goals, and it is our conclusion that no webmail-style application could.”

– Nadim Kobeissi (An Analysis of the ProtonMail Cryptographic Architecture).

The state of affairs for browser-based cryptography is not great, but it is also not pointless: in-browser cryptography protects against future compromise of the server and limits the window of exploitation, since the compromised server can only exploit people actively using the protocol, e.g. victims who logged in while the service was compromised, as opposed to a hacker simply exfiltrating all plaintext user data from all users given brief access.

It is also worth remembering that often the alternative to in-browser crypto is no crypto. Nobody ever complains about the poor implementation of E2E encryption in the Dropbox or Gmail web interface: because it is simply non-existent. No matter how flawed, all privacy-respecting alternatives, whether they rely on in-browser cryptography or not, are an improvement over the status quo. And if the convenience of a browser application is required to get ordinary users to switch to a privacy-respecting alternative, then in-browser crypto is far preferable to the user sticking with existing services which are insecure by design.

What’s The Solution?

Is passive security inherent or can we do better in the browser in 2023? At a high level the solution to the problem outlined is obvious: we must devise a mechanism to limit the application developer’s ability to deploy new versions at will, individually to each user, without leaving an audit trail.

An Unsatisfactory Solution: Browser Extensions

One solution that people have suggested to avert the woes of in-browser crypto is to instead deploy a browser extension: by using a browser extension, the application developer is forced to deploy the same version to all users through e.g. addons.mozilla.org, with the assumption that any malicious behavior is therefore more likely to be detected. Additionally it leaves a compromised version of the application on the distribution service, which can later be used to prove that security was compromised.

This implicitly requires the users to trust Google / Mozilla, which could of course collude with the application developer to still enable a targeted attack. A larger issue is the compounding security issue of having everyone deploy browser extensions: you trust every extension with everything. These applications (usually) run outside a sandbox, and therefore a single malicious app can now negate the cryptographic guarantees of all your applications. So although it might work for a small number of applications, it is inherently not scalable from a security perspective. Having to install a browser extension is also a barrier to adoption and requires distinct extensions for each browser.

Proposal: Host The Application On a Domain Outside Your Control

Luckily there is actually a way to prevent yourself from distributing a new version of your web application to targeted users: serve it from a domain you do not control, i.e. a domain for which you do not have a TLS certificate. This way, whenever the application must be updated, the new version must be provided to whoever controls the distribution domain. These people can then maintain an archive of previous versions or even build it from source themselves (like F-Droid).

But wait: if you serve the application from a domain controlled by someone else, you are now simply trusting them to not serve a malicious version! What have we gained?

While we would gain nothing by simply outsourcing the hosting of the application, what if no one controlled the domain? What if we could “lock ourselves out”? What if it took multiple people / authorities to sign off on a new version of the page before it would be served from the domain? The goal of this blog post is to observe that we can achieve this, with practical efficiency, using existing web technologies supported by Chrome, together with threshold EdDSA / ECDSA signing and distributed key-generation protocols.

Signed HTTP Exchanges and Threshold Crypto

The solution is going to crucially rely on three technologies: SXG, threshold cryptography and the web PKI, including its certificate transparency logs. So we need a (very) brief introduction to all these.

What Are Signed HTTP Exchanges (SXG)?

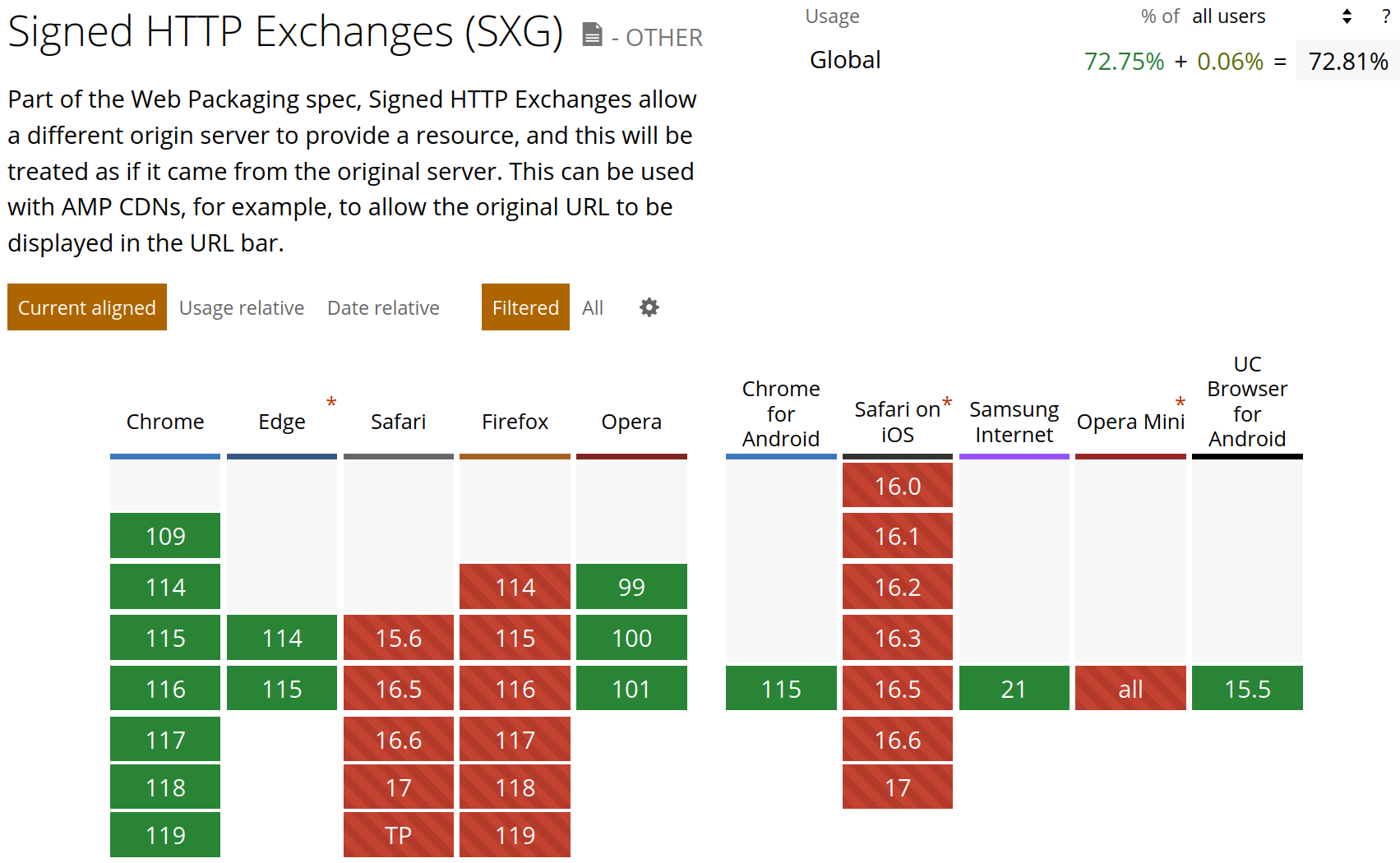

Signed HTTP exchanges is a (recent-ish) browser technology adopted by many Blink-based browsers.

I won’t explain SXG in detail; if you want more details, check out web.dev’s Guide on Signed Exchanges (SXGs).

The crux of Signed HTTP Exchanges is, well, to sign HTTP Exchanges. At a high-level the idea is rather simple:

- The domain owner obtains a special SXG certificate: an X.509 cert with the CanSignHttpExchanges extension, for the domain in question, e.g. my-app.tld. This certificate is in addition to any regular TLS certificate.

- When a request is made to the origin TLS server with the Accept header set to application/signed-exchange;v=b3, the server returns a “signed exchange”: the HTTP request/response pair, cryptographically signed by the server using the SXG certificate.
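As a rough illustration of the request side, the sketch below builds (but does not send) a request that advertises support for signed exchanges. Only the Accept value comes from the text above; the helper name build_sxg_request and the use of Python's urllib are assumptions made for the example.

```python
import urllib.request

# The media type a client uses to ask for a signed exchange.
SXG_ACCEPT = "application/signed-exchange;v=b3"


def build_sxg_request(url: str) -> urllib.request.Request:
    """Build a GET request telling the server we accept signed exchanges."""
    return urllib.request.Request(url, headers={"Accept": SXG_ACCEPT})


# my-app.tld is the placeholder domain used in this post.
req = build_sxg_request("https://my-app.tld/")
print(req.get_header("Accept"))
```

Passing req to urllib.request.urlopen would actually send it; a server set up for SXG would be expected to answer with the same media type in its Content-Type header.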

The point of signing the request/response is that this “signed exchange” may now be served by anyone on behalf of the destination site.

The signed exchange is served from a different domain, e.g. by google.com, when listing search results: it’s a document signed by my-app.tld served through a TLS connection to google.com.

Whenever the browser encounters a valid signed exchange it treats it as if the content was served directly from the origin, exactly as if it made the original request to the origin, got the response and cached the response:

“Since the exchange is signed and validated against your certificate, the browser trusts the contents and can display the content with attribution to the original URL. Now, when the user clicks on the link to view the contents, it magically loads instantaneously from the local cache.”

Hence the user browser never contacts the origin and in particular never establishes a TLS connection with anyone having a valid TLS cert for my-app.tld.

As you might imagine, this technology changes the security model on the web substantially: anyone can serve content on behalf of anyone, provided they have a valid signed exchange.

For this reason SXG has been controversial. So far SXG has primarily been used to speed up delivery of webpages accessed from Google search. It was originally proposed by Google and has only been implemented by Blink-based browsers, so it is widely seen as a “Google technology”. To the best of my knowledge, this post is the first proposal to use SXG to enhance software distribution on the web.

Another notable consequence of SXG is that (some) content on the web now has non-repudiation, meaning you can cryptographically prove, to a third party, that a given site served a given response. This is not possible using the TLS exchange, where the contents are only authenticated using an AEAD.

The consequences are similar to those of DKIM for email.

A Brief Intro to Threshold Cryptography

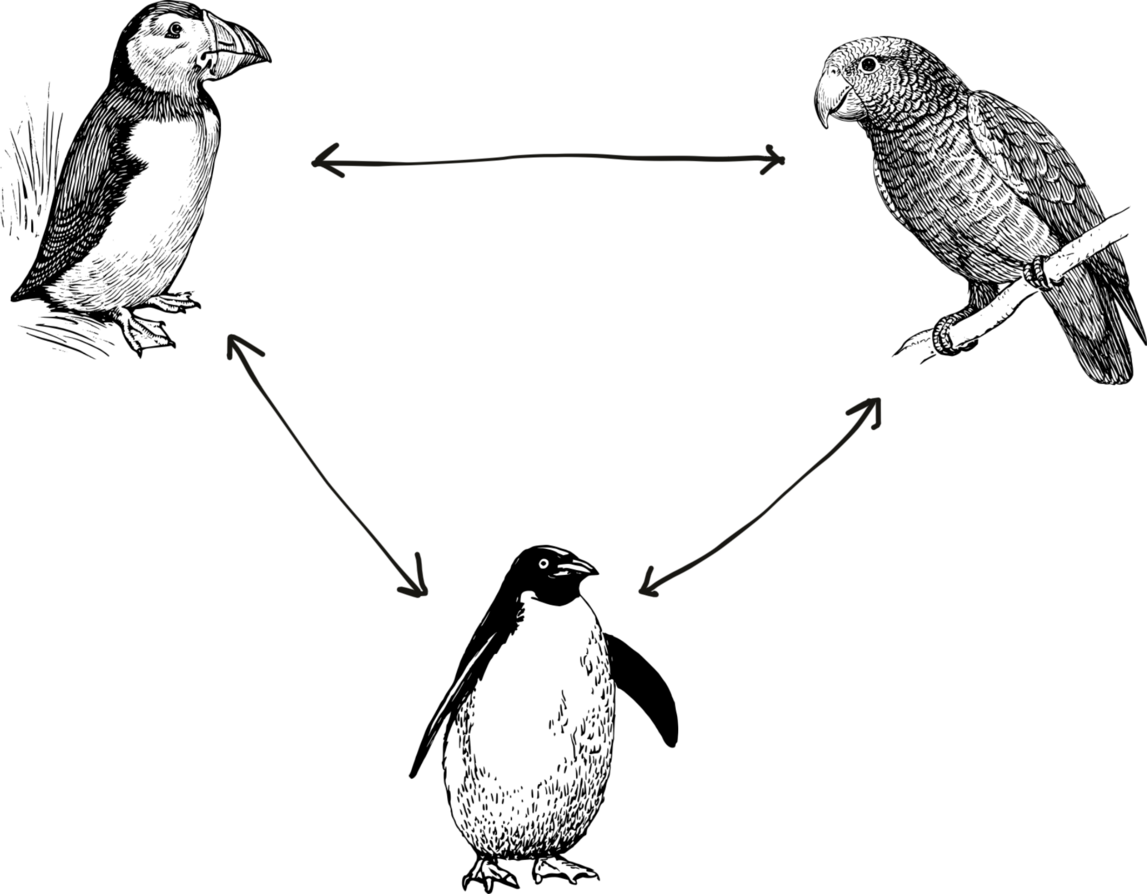

Threshold cryptography is a special case of multi-party computation, enabling two or more parties to jointly execute cryptographic operations, usually signing or decryption. In our case we are interested in protocols which enable the parties to jointly generate a signing key in such a way that no single party learns the signing key: the key is secret shared among n parties (the figure shows n = 3), requiring at least t ≤ n of these parties to come together in order to reconstruct the signing key. This problem is called Distributed Key-Generation, because it generates a secret key in a distributed way. At the end of the protocol the parties (birds) obtain a public key (the signature verification key) and a share of the corresponding signing key.
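To make “secret shared among n parties with threshold t” concrete, here is a minimal sketch of Shamir secret sharing over a prime field. It is a simplification: a trusted dealer holds the secret and hands out shares, whereas a real distributed key-generation protocol produces the shares without anyone ever seeing the key. The field size and function names are assumptions chosen for the example.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic is done modulo this


def split(secret: int, t: int, n: int) -> list[tuple[int, int]]:
    """Split `secret` into n shares such that any t of them reconstruct it."""
    # Random polynomial of degree t-1 with the secret as its constant term.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(t - 1)]

    def f(x: int) -> int:
        return sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME

    # Party number x receives the point (x, f(x)).
    return [(x, f(x)) for x in range(1, n + 1)]


def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = secrets.randbelow(PRIME)
shares = split(key, t=2, n=3)
assert reconstruct(shares[:2]) == key   # parties 1 and 2
assert reconstruct(shares[1:]) == key   # parties 2 and 3
```

With t = 2 and n = 3, any two shares recover the key, while a single share on its own reveals nothing about it.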

In order for this to be useful for us, we also require a way for these parties to jointly sign a message using the secret-shared signing key. Such protocols are called threshold signing protocols or in the simpler case, when n = t i.e. all parties need to cooperate to generate the signature, they are called multi-signature protocols.

Note that these should not be confused with the related notion of “multisignatures” in e.g. Bitcoin, in which each party independently generates a signature, whereas in our case a single signature is generated by multiple parties. The resulting signature is exactly the same as if it was generated by a single party holding the signing key, hence the party verifying the signature need not be aware that the signature was generated in a distributed way or how many parties were involved.

Examples of such protocols include the simple 3-round scheme by Lindell or the improved 3-round Sparkle scheme by Crites, Komlo and Maller for EdDSA, or the more complicated 2-round FROST2 scheme by Bellare, Crites, Komlo, Maller, Tessaro and Zhu. Because Schnorr signing is “linear in the message”, threshold signing for Schnorr (EdDSA) is very clean. The story for ECDSA is slightly more nasty, because of the field inversion required in signing, but still very practical.
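The linearity that makes Schnorr-style threshold signing clean can be seen in a toy n-of-n example: each party computes a partial response from its own key share and nonce, and the partial responses simply add up to one ordinary signature. The sketch below is not any of the schemes named above; it uses a deliberately tiny group and omits the commitment rounds and rogue-key defenses that real protocols need, so treat every parameter and function name as an assumption for illustration only.

```python
import hashlib
import math
import secrets

# Toy group: the subgroup of prime order Q in Z_P^*. Far too small to be secure.
P, Q, G = 2039, 1019, 4


def challenge(R: int, X: int, msg: bytes) -> int:
    """Hash the nonce commitment, public key and message into an exponent."""
    data = f"{R}|{X}|".encode() + msg
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def verify(X: int, msg: bytes, sig: tuple[int, int]) -> bool:
    """Ordinary single-signer Schnorr verification: g^s == R * X^c."""
    R, s = sig
    return pow(G, s, P) == R * pow(X, challenge(R, X, msg), P) % P


def joint_sign(key_shares: list[int], msg: bytes) -> tuple[int, tuple[int, int]]:
    """All parties sign together; returns (joint public key, signature)."""
    nonces = [secrets.randbelow(Q) for _ in key_shares]
    # Each party publishes g^{x_i} and g^{r_i}; the products are the joint values.
    X = math.prod(pow(G, x, P) for x in key_shares) % P
    R = math.prod(pow(G, r, P) for r in nonces) % P
    c = challenge(R, X, msg)
    # Each party computes s_i = r_i + c * x_i locally; the partials just add up.
    partials = [(r + c * x) % Q for r, x in zip(nonces, key_shares)]
    return X, (R, sum(partials) % Q)


X, sig = joint_sign([5, 7, 11], b"signed exchange bytes")
assert verify(X, b"signed exchange bytes", sig)
```

The verifier runs the same check it would run for a single signer, which is why the browser need not know that several auditors were involved.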

Current protocols are practical for tens to low hundreds of parties.

Signed Exchanges from a Domain No One Controls

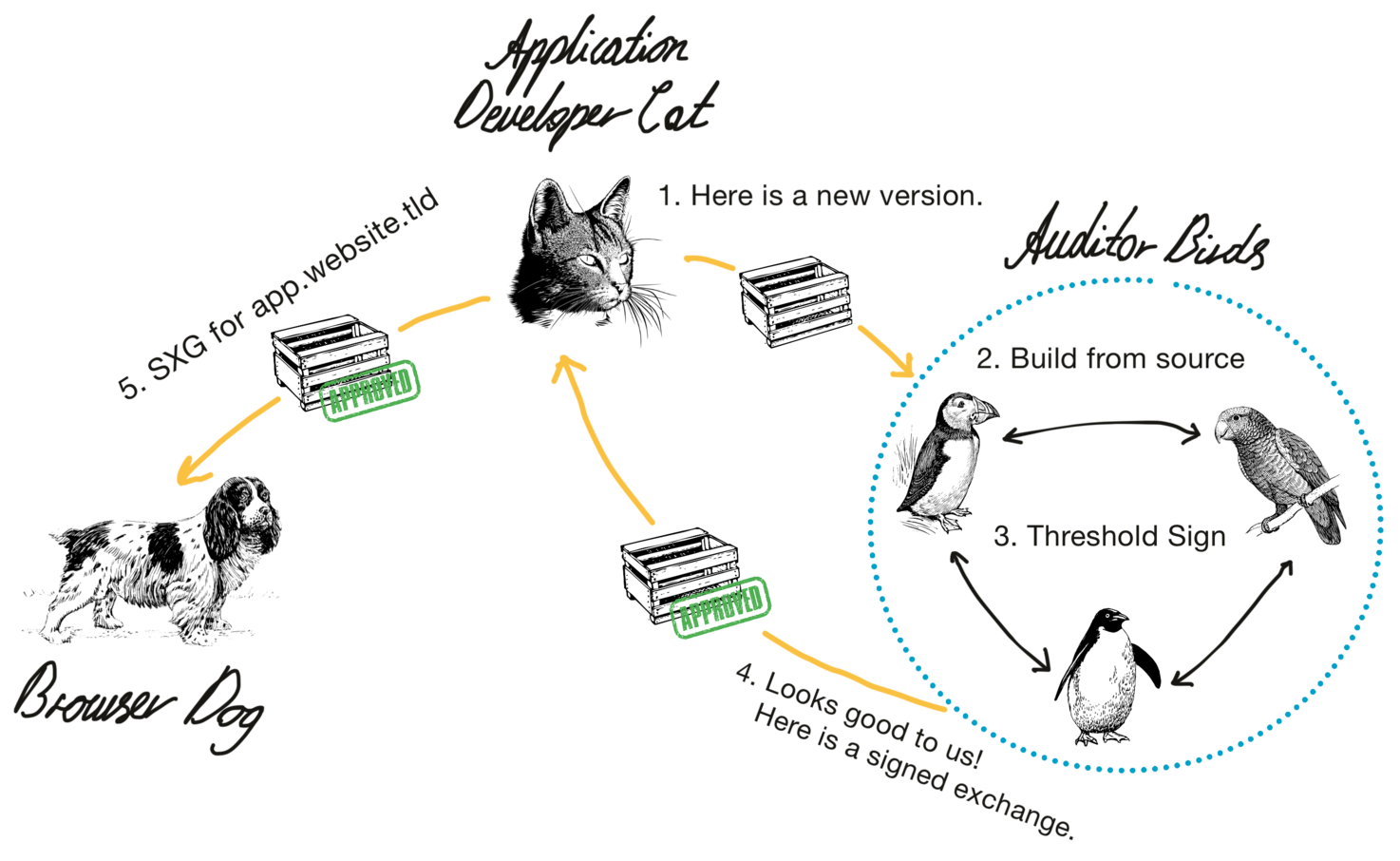

So now we are ready to apply these tools to improve software distribution on the web.

At a high level the idea is simple: we will have a domain (e.g. a subdomain, app.website.tld) for which no TLS certificate exists, and the signing key of the SXG certificate is secret shared among a set of independent “auditor” parties (the birds shown above), a majority of which are assumed to be honest.

Hence the only way to load the https://app.website.tld page is by obtaining an HTTP exchange signed by the honest majority of auditors: it’s not possible to create a TLS connection to the domain. Even if fewer than t of the auditors are corrupt, they cannot publish a malicious update by signing an HTTP exchange serving the malicious version.

From the browser’s perspective the signed exchange just looks like a regular exchange signed by a centralized party, which in turn looks like a TLS connection to app.website.tld from the user’s perspective (looking at her address bar).

Getting a Secret-Shared SXG Certificate

In order to obtain a certificate for which no one knows the signing key, the auditors run a distributed key-generation (DKG) protocol to generate a fresh public key. Then they run a threshold signing protocol to generate a certificate signing request (CSR) for the SXG certificate and provide the CSR to the domain owner (i.e. the application developer).

The domain owner either provides this certificate signing request to a traditional CA supporting SXG (currently only DigiCert) or uses ACME (only the Google CA supports this) to obtain the SXG certificate, for which he does not know the signing key. Note, this subdomain would not even have a valid TLS certificate: SXG certificates cannot be used for TLS connections, and having a separate TLS certificate would enable serving a new version of the app directly to the client, which is exactly what we want to prevent.

The certificate transparency logs are monitored by the auditors to ensure that no other certificate exists for the subdomain and that the untrusted application developer actually uses the public key generated by the auditors and not a maliciously generated key (e.g. one he generated himself). The auditors keep monitoring the log to ensure that no other certificate is created in the future as well. Hence, even though the domain is under the control of the untrusted application developer, if he obtains another certificate he is immediately detected and it is a clear sign of malicious behavior.
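The monitoring rule described above can be sketched as a small filter over log entries: any certificate for the domain that is not an SXG certificate for the auditors' key is a red flag. The LoggedCert record is a hypothetical, heavily simplified stand-in for a real certificate transparency entry, and the sketch ignores details a real monitor would have to handle, such as wildcard certificates covering the subdomain.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class LoggedCert:
    """Hypothetical, simplified view of one certificate transparency entry."""
    domain: str
    public_key_der: bytes          # encoded public key the certificate binds
    can_sign_http_exchanges: bool  # True for SXG certificates


def fingerprint(public_key_der: bytes) -> str:
    """SHA-256 fingerprint used to compare public keys."""
    return hashlib.sha256(public_key_der).hexdigest()


def suspicious_entries(entries, domain, expected_fingerprint):
    """Return every logged certificate for `domain` the auditors did not authorise."""
    bad = []
    for cert in entries:
        if cert.domain != domain:
            continue
        is_ours = (cert.can_sign_http_exchanges
                   and fingerprint(cert.public_key_der) == expected_fingerprint)
        if not is_ours:
            bad.append(cert)
    return bad


dkg_key = b"public key produced by the DKG"  # placeholder bytes
log = [
    LoggedCert("app.website.tld", dkg_key, True),
    LoggedCert("app.website.tld", b"developer's own key", False),
    LoggedCert("other.example", b"unrelated key", False),
]
alerts = suspicious_entries(log, "app.website.tld", fingerprint(dkg_key))
```

Here alerts contains only the second entry: a regular TLS certificate for the audited subdomain, which is exactly the event the auditors would announce publicly.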

Deploying a New Version

When a new version of the application must be deployed, the application developer simply submits it to the auditor parties, which can run any type of “verification” on the submitted artifacts, e.g.

- Pulling the source from the public GitHub.

- Checking git signatures.

- Building the application from source.

- Posting the source code to a public repository.

- Enforcing a delay after submitting an update: allowing time to catch a malicious update.

The auditors then create HTTP requests/responses serving the files of the new application and

sign these exchanges using threshold EdDSA producing a signed HTTP exchange.

Note that by combining SXG with Subresource Integrity for all sub-resources, only the request for the “landing page” needs to be signed directly: everything else is implicitly signed and can be served directly by the untrusted application developer on a separate domain, e.g. resources.website.tld.

Hence the only page signed by auditors could be as simple as an empty HTML body and a script tag with an integrity field which has been computed by the auditors.
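A minimal sketch of what the auditors would compute for such a landing page: the Subresource Integrity value of the application bundle, embedded in a page that does nothing except load that one script. The bundle contents, file name and helper names are placeholders invented for the example.

```python
import base64
import hashlib
import html


def sri_sha384(content: bytes) -> str:
    """Return a Subresource Integrity value of the form 'sha384-<base64>'."""
    digest = hashlib.sha384(content).digest()
    return "sha384-" + base64.b64encode(digest).decode("ascii")


def landing_page(script_url: str, script_bytes: bytes) -> str:
    """Minimal page whose only job is to load one integrity-pinned script."""
    return (
        "<!doctype html><html><body>"
        f'<script src="{html.escape(script_url)}" '
        f'integrity="{sri_sha384(script_bytes)}" crossorigin="anonymous"></script>'
        "</body></html>"
    )


# Placeholder bundle; in practice this is the output of the auditors' build.
bundle = b"console.log('hello from the audited build');"
page = landing_page("https://resources.website.tld/app.js", bundle)
print(page)
```

The crossorigin attribute is included because the script is fetched from a different origin than the signed page, and browsers only apply integrity checks to cross-origin resources that are loaded with CORS.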

This signed HTTP exchange is then given to the application developer and can be served by an untrusted centralized server or a CDN, since no TLS connection to app.website.tld is required.

The auditors are not involved in serving the application: the parties need only be online when a new version is deployed or when the signed exchange expires (e.g. once a day). The rest of the time they simply monitor the Certificate Transparency logs.

The User Experience

The user experience is the same as if the application was served directly from app.website.tld, because, in a cryptographic sense, it is.

The end-user has to verify that the auditors are indeed managing app.website.tld, e.g. by checking the auditors’ websites to ensure it appears on the list of “managed domains”. She also needs to verify that the domain in the address bar does not change.

However because the application developer does not control any valid certificate for the domain, the application cannot be altered without either:

- Registering a new certificate for app.website.tld: which would be observable in certificate transparency logs and immediately detected by the auditors, who would inform the world of this out-of-band, e.g. a public notice on social media or their website.

- Colluding with sufficiently many auditors to sign a new malicious version of the app. This risk can be minimized by having a large number of auditors, and/or setting the threshold t very high, e.g. t = n requires all the auditors to be malicious. The attack leaves a signed exchange behind which can be used to prove malicious behavior.

- Uploading a new malicious version to the (honest) auditors: which results in the source code getting published and the application being built from source (or whatever other policies are enforced), just like uploading a malicious version of an Android app to F-Droid. This is exactly what we wanted to ensure.

Build & Sign Webpages as a Service

By getting together a set of trustworthy, reliable and geographically distributed parties to act as auditors we can create automatic code-signing for arbitrary web pages. The solution scales well to auditors signing many apps deployed by different developers: since the auditors only interact when a new version is released and nothing in the flow above requires human interaction on the part of the auditors.

Usability for the end user can be improved by having the auditors offer their own domain for hosting the signed applications, i.e. rather than app.website.tld the app would be “hosted” on app-website.auditors.tld. This means the end-user would not need to know the particular domain managed by the auditors for website.tld to be assured of the update mechanism, but could simply verify that the domain is a subdomain of auditors.tld.

None of these domains would have valid TLS certificates, just like app.website.tld.

A drawback of using SXG in this way is that it “breaks” the address bar: the user cannot bookmark a URL such as “https://app.website.tld/email”, because if she navigates to it directly without a cache hit, the browser will attempt to create a TLS connection to “app.website.tld”, which it cannot. Therefore the user must visit e.g. “https://website.tld/email”, which then sends the exchange serving the email application and redirects to e.g. “https://email.website.tld”. This can be somewhat mitigated by using unique URLs for every version of the app and having the cache never expire (by setting a very long lifetime in “Cache-Control” on the signed exchange). The application itself can then check if it should “update” by redirecting to “https://website.tld”, which will load the new version. This way the user can still go directly to the URL, as long as she does not clear the browser cache…

Conclusion

SXG offers a way to sign web applications. So far people have been using it for serving webpages faster, but by combining it with threshold cryptography we obtain a practical solution to previous objections to in-browser cryptography: a secure software distribution channel for the web. The proposed solution offers security that is at least as good as distributing native applications, which must also be signed by some trusted distribution service.

The primary drawback of the proposed solution is that it relies on SXG, which is controversial (the official Mozilla stance is “negative”) and only supported in Blink-based browsers. However, since it can exist alongside the original distribution method, it might be worth protecting the ~70% of users who can make use of this technology.

Thanks for reading.

If you are interested in this, feel free to ping me.